CPU performance; Wall time, CPU time, wait time

Last time we looked at how a typical system deals with purely CPU-bound applications and how the webserver, OS and CPU cores work together to produce the maximum throughput for your system. We started by looking at how we measure the ‘speed’ of your application by taking the ‘wall time’ (i.e. the time it takes to run your app as measured by your wall clock) and then dividing it into ‘CPU time’ (I.e the amount of time the CPU is actually using cycles on your app) and ‘wait time’ (the amount of time the CPU is waiting for something to happen). In this post we’ll look at the wait time of an application so if you havent already done so you should read the first post on cpu time.

What is wait time?

Simply put the CPU is waiting around for something to happen. That’s not to say you have a feral CPU, rather you have told it to wait for something. For example it could be waiting for:

- A disk to read or write some data

- An event to trigger (perhaps from the user)

- Another process to return

- A database query to respond

- A response from a web-service or REST API

Probably the most common form of waiting in a web-app is when you issue a request across a network to retrieve some data, be that from a database or some sort of API. Once your app has established a connection to the remote server and initiated the request, the CPU doesnt really have to do anything; it’s just sat there waiting for the network to respond. As we will see, this kind of behaviour scales very differently to the CPU time we looked at last time.

Scaling Wait Time

To simulate waiting for a network resource we can create a simple script that ‘sleeps’:

<?php

usleep(200000);

This will make the CPU sleep for 200ms, that’s it! If you run this script using curl and time then you should see approximately 200ms reported:

rathers@geoff:~$ time curl http://127.0.0.1/wait.php real 0m0.214s user 0m0.009s sys 0m0.003s

If you remember from last time we had a formula that could work out the max requests per second for our application based on response time and number of cores:

Max Req/s = No Cores / Response Time

So again using my 4 core system we should expect to see 4 / 0.2 = 20 requests per second. Right? Well let’s find out! First let’s try it with a concurrency of 4, which gave us 20 req/s last time:

rathers@geoff:~$ ab -c 4 -n 100 http://127.0.0.1/wait.php ... Requests per second: 19.87 [#/sec] (mean)

So far so good! So last time even when we doubled the concurrency to 8 we still only managed 20 req/s. What about now?

rathers@geoff:~$ ab -c 8 -n 100 http://127.0.0.1/wait.php ... Requests per second: 39.72 [#/sec] (mean)

Much better! So how high will this go? Will req/s keep on doubling as I double the concurrency?

rathers@geoff:~$ ab -c 32 -n 1000 http://127.0.0.1/wait.php ... Requests per second: 158.22 [#/sec] (mean)

rathers@geoff:~$ ab -c 128 -n 10000 http://127.0.0.1/wait.php ... Requests per second: 627.36 [#/sec] (mean)

rathers@geoff:~$ ab -c 256 -n 10000 http://127.0.0.1/wait.php ... Requests per second: 1189.24 [#/sec] (mean)

rathers@geoff:~$ ab -c 512 -n 10000 http://127.0.0.1/wait.php ... Requests per second: 663.02 [#/sec] (mean)

Bascially, yes! We have linear scalability!

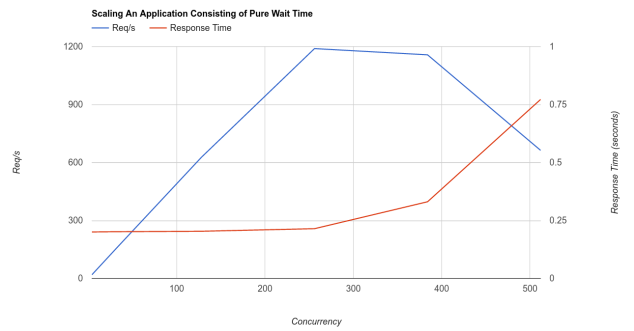

This graph shows nicely shows what is going on (click the graph to go to the raw data). The CPU is having no problems whatsoever initially; response time remains a constant 200ms and req/s scale up linearly. That is until we hit a concurrency of 256. At this point the req/s starts tailing off and response times starts increasing, both exponentially!

If you remember from last time, when we had reached our maximum throughput of 20 req/s our CPU cores were totally maxed out and we could see that in dstat. This time even at nearly 1200 req/s my CPU cores are still nowhere near maxed out as this dstat trace shows:

-------cpu0-usage--------------cpu1-usage--------------cpu2-usage--------------cpu3-usage------ usr sys idl wai hiq siq:usr sys idl wai hiq siq:usr sys idl wai hiq siq:usr sys idl wai hiq siq| 23 9 65 0 1 2: 22 9 65 0 1 3: 25 9 63 0 1 2: 25 8 64 0 1 2| 19 12 64 0 2 3: 16 12 66 0 1 5: 20 11 65 0 1 3: 21 10 64 0 1 4| 20 12 62 0 1 5: 26 12 57 0 1 4: 31 12 48 3 1 4: 24 12 60 0 1 3| 18 22 55 0 2 3: 23 12 61 0 1 3: 21 11 61 0 2 5: 21 14 60 0 1 5| 31 16 47 0 2 4: 29 11 55 0 1 4: 26 13 56 0 1 4: 27 13 54 0 2 4| 21 13 59 0 2 5: 22 12 60 0 2 4: 20 15 59 0 1 5: 20 14 60 0 1 5| 29 13 53 0 1 4: 29 13 53 0 1 4: 28 12 55 0 1 4: 24 11 60 0 1 4| 35 11 49 0 2 4: 23 13 59 0 1 4: 31 11 53 0 1 4: 23 12 59 1 1 4|

All 4 cores are around 60% idle! Hopefully this illustrates how different CPU-time and wait-time are.

The Bottleneck

So what is so special about the number 256? Things were exactly as you might expect up to this number; the CPU was quite happy to sit and do 256 waits in parallel, waiting is afterall pretty easy to do! Even I could probably manage to do 256 lots of nothing in parallel! The answer is nothing really to do with the application, or the number of CPUs or RAM or anything like that; it’s simply an apache config value. The apache config on my system limits apache to 256 worker processes. This means as soon as you ask for more than 256 requests in parallel things start to go wrong as all the worker processes are busy and cannot accept the extra work.

Optimising Webserver Performance for Wait Time

As we’ve seen so far because our application is just waiting we are limited by the number of processes that the webserver will give us. This value will become the maximum number of requests that our application can handle concurrently.

So how do we scale further? Well it really depends on your webserver. In all the examples so far I have been using the Apache webserver. To scale our waiting app further we need to look into some of the internals of Apache and something that Apache calls the ‘Multi-Processing Module’ or MPM.

Apache MPMs

If you’re using Apache in a standard configuration, the likelihood is that you’re using the ‘prefork’ MPM already. This is a simple but robust MPM that uses a single master process that forks worker processes. Each worker process is single threaded.

The distinction between threads and processes is important here. A process is a stand alone task governed by the OS. It is given its own block of memory that only it can access and if it tries to access the memory belonging to another process the OS will shut it down. This is known as a segmentation fault (or segfault, illegal operation, crash etc). Many processes can run in parallel as the OS will manage swapping processes on and off the various CPU cores based on demand and relative process priorities. A thread is analogous to a process but it is a stream of operation within the process itself. The process is responsible for managing the threads within it. So the OS can manage multiple processes and a process can manage multiple threads within it.

So when we say prefork MPM produces single threaded processes this means that each process can only do one thing at once. Therefore to serve an HTTP request, a spare process must be available to accept and process the request. Initially apache will start with very few worker processes (5 on my system). When it detects that it is running out of processes it will spawn more. Because it is computationally expensive to create more processes apache limits the rate at which more are spawned. However the rate increases exponentially until the number of processes reaches a hard limit set by the MaxServers directive (256 on my system). You can see this happen quite clearly if you use the tools I gave you in my previous post. If you use apachebench to batter apache with loads of requests and monitor the number of processes running you will see it slowly creep up, then the rate of increase will keep rising until eventually the number of processes plateaus.

Tuning prefork MPM

For the most part apache will take care of itself. The only value you need to really worry about is the MaxClients directive which as we’ve seen caps the number of worker processes that can be spawned. Set this value too low and you wont be able to serve enough traffic, set it too high and you’ll run out of RAM. It also depends on your application and your machine as to how you tune this value. If you recall our CPU-limited example from last time you’ll remember that no matter how hard we tried we couldnt squeeze more than 20 req/s out of it. If this were all we had to serve there wouldnt be much point in setting MaxClients much beyond 20 as the CPU simply cant cope with more than 20 requests in parallel. Allowing it to go higher is only going to make things worse. Now consider our wait-limited example above. This is happily going up to 256 req/s and this is in fact limited by MaxClients itself. So it seems logical to allow MaxClients to go higher if we have enough RAM to do so.

Other Apache MPMs

The main MPMs besides prefork are called worker, event and winnt. As event is experimental and winnt is for use on Windows (and running servers on Windows is the reserve of the insane) we shall look briefly at worker MPM.

Worker MPM is essentially the same as prefork MPM in terms of how the apache processes are spawned and killed but each process can have multiple threads running within it. This allows you to have fewer processes running to achieve the same level of concurrency as with prefork. The problem with this approach though is that many of the scripting languages that can be embedded into apache (PHP in our case) aren’t guaranteed to be thread-safe and so to use worker MPM you need to interface with your scripting language over FastCGI or similar.

Worker MPM is not a panacea however, you will still have a maximum number of threads that can be in operation at anyone time as set by your apache config. For a wait-heavy application you will probably be able to squeeze more out of your server using worker than with prefork but you’ll still have a limit.

Event based servers

If your application does a lot of waiting then you might want to consider using an event driven platform such as node.js. The event-driven architecture takes a fundamentally different approach to dealing with having to wait for things to happen. Rather then make a whole process or thread block until something has happened you instead register event handlers. When something happens that you are interested in (e.g. your API query has got a response) then an event is raised and any registered event handlers are executed. This gives a totally non-linear, asynchronous flow and makes good use of system resources when dealing with lots of waiting around.

Real-world Applications

The examples I have used across this post and the last were deliberately simple and one-dimensional but real-world applications are much more complicated. That said they are essentially made of these two components; time you are hammering the CPU and time when you’re waiting around. Hopefully you now have an understanding of the effect these two things have on your server and how to recognise them.

Optimising Applications

I started my previous post with the hypothetical situation of having to support 1000 req/s for a given application. So if you benchmark your app and you’re only getting 500 req/s out of it, how do you squeeze the extra performance out? Well you first need to work out what your limits are. If at that 500 req/s limit your CPUs are maxed out then you’re probably limited by CPU time. If the CPUs aren’t maxed out but all your apache processes are busy then you are likely limited by wait time.

The details of specific optimisations are beyond the scope of this article but to reduce your CPU time you’re going to have to look at using less CPU cycles somehow. That maybe by streamlining your code, efficiency improvements, caching or maybe even compiling your PHP code. To reduce your wait time you’ll need to look at increasing the speed of whatever is holding you up. That could be a disk raid setup, database or query optimisation, faster network between you and an API server etc, or if you can you should look at caching the output of the slow parts so you dont have to wait for them all the time.

Premature Optimisation

It is important to know what the limits of your application are and the nature of them before you start to optimise it. Imagine your code does lots of disk writes and is not meeting your non-functional requirements for throughput in req/s. You might at first think you are CPU-time limited so you start stripping out for loops and using more efficient algorithms in your code. It wont make any difference and is arguably wasted effort. If you monitored your server while benchmarking you should have been able to see the massive amounts going onto the disk and you would have seen the write speed of the disk plateau as you push the load upwards. So what you should really be doing is looking at is making the disks go faster!

Conclusion

We’ve seen how CPUs and webservers respond to high load in terms of being asked to do a lot of stuff at once and also being told to wait around for other stuff to happen. We’ve looked at a few simple tools to monitor what your system is doing and how to optimise some aspects of the server for your situation. Next time you are asked to make something ‘faster’, ask yourself what that actually means in terms of your application and be sure to establish exactly what the limiting factors are before you try and optimise around them.